As cloud computing and AI become essential to modern business, their environmental impact is increasingly under the microscope. The leading cloud providers (Amazon, Google, and Microsoft) have made significant commitments to reduce their carbon footprints. Amazon, the largest corporate purchaser of renewable energy, aims for net-zero carbon emissions by 2040. Google is working toward 24/7 carbon-free energy by 2030, while Microsoft, which operates Azure, aims to be carbon negative by 2030. These efforts are commendable, but as AI becomes more prevalent, the environmental challenge shifts: how do we measure and reduce the carbon footprint of AI applications themselves?

This is where Software Carbon Intensity (SCI) comes in. As defined by the Green Software Foundation, SCI is a metric designed to track the carbon emissions of software, providing a clear, actionable way to understand the environmental impact of AI. Unlike organization-wide emissions reporting, SCI scores an individual application per unit of work, which suits AI workloads built on resource-intensive processes like training large language models or running complex inference tasks.

What is Software Carbon Intensity (SCI)?

SCI measures the carbon footprint of software applications by combining energy consumption, the carbon intensity of the power grid, and the environmental cost of the hardware running the software. The formula for SCI is:

SCI = ((E * I) + M) / R

Where:

- E represents the energy consumed by the software, typically in kilowatt-hours (kWh).

- I is the carbon intensity of the energy grid powering the data center, in grams of CO2-equivalent per kWh (gCO2e/kWh).

- M accounts for the embodied emissions of the hardware, in gCO2e, which includes the carbon footprint of manufacturing, transportation, and disposal.

- R is the functional unit, such as the number of users or API calls. Dividing by R makes SCI a rate (emissions per user or per call) rather than a total, so scores stay comparable as usage grows.

For AI applications, particularly large-scale models, SCI is crucial because the energy demands for training models, running inference, and maintaining hardware infrastructure are significant. Each API call or user query adds to the cumulative carbon footprint.
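
To make the arithmetic concrete, here is a minimal Python sketch of the calculation, reading the formula as operational emissions (E times I) plus embodied emissions (M), with the sum divided by R. The function name, parameter names, and all input figures are hypothetical and chosen only for illustration; they are not measurements of any real service.

```python
def sci(energy_kwh: float, intensity_g_per_kwh: float,
        embodied_g: float, functional_units: float) -> float:
    """Return SCI in gCO2e per functional unit: ((E * I) + M) / R."""
    if functional_units <= 0:
        raise ValueError("functional_units must be positive")
    operational_g = energy_kwh * intensity_g_per_kwh  # E * I
    return (operational_g + embodied_g) / functional_units


# Hypothetical figures for one day of an inference service (assumptions).
score = sci(
    energy_kwh=120.0,            # E: energy used that day
    intensity_g_per_kwh=400.0,   # I: grid carbon intensity
    embodied_g=12_000.0,         # M: hardware emissions allotted to that day
    functional_units=1_000_000,  # R: API calls served
)
print(f"{score:.3f} gCO2e per API call")
```

Because the result is a rate, the same function works whether R counts API calls, users, or any other unit, as long as E and M cover the same time window as R.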

How to Calculate SCI for AI Applications

To calculate SCI for an AI application, you must consider all stages of the AI lifecycle:

- Training: Large models require massive computational resources during training, which is a one-time but energy-intensive process.

- Inference: Every user request or query to an AI system generates emissions, especially for high-demand services like chatbots or recommendation systems.

- Embodied Emissions: The hardware used for AI—servers, data centers, and networking equipment—contributes embodied emissions over its lifecycle.

For example, an AI model might be trained in a data center powered by a mix of renewable and non-renewable energy sources. If that data center is located in a region where coal is a dominant energy source, the I factor (carbon intensity) will be high, increasing the overall SCI. Likewise, older and less efficient hardware uses more energy for the same work, which raises the E factor. The M factor (embodied emissions) depends instead on the carbon cost of manufacturing the hardware and on how much of its lifetime the application uses.
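
The lifecycle stages above can be combined into a single per-request figure. The sketch below is one possible way to do that, not a prescribed method. It assumes that training energy is spread over the requests served in the accounting window and that training and inference run on the same grid. The `Lifecycle` class, its fields, and every number are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Lifecycle:
    training_kwh: float           # one-time training energy
    inference_kwh_per_req: float  # energy per served request
    embodied_g: float             # hardware emissions allotted to this app
    requests: int                 # R: requests in the accounting window


def sci_per_request(lc: Lifecycle, intensity_g_per_kwh: float) -> float:
    """Spread training, inference, and embodied emissions over all requests."""
    energy_kwh = lc.training_kwh + lc.inference_kwh_per_req * lc.requests
    return (energy_kwh * intensity_g_per_kwh + lc.embodied_g) / lc.requests


# Illustrative inputs only; none of these are measured values.
lc = Lifecycle(training_kwh=50_000.0, inference_kwh_per_req=0.0003,
               embodied_g=2_000_000.0, requests=100_000_000)

for label, intensity in [("coal-heavy grid", 800.0), ("low-carbon grid", 50.0)]:
    print(f"{label}: {sci_per_request(lc, intensity):.3f} gCO2e/request")
```

With these made-up inputs the coal-heavy case comes out about eleven times higher than the low-carbon case, even though the model, the hardware, and the traffic are identical. Only the I factor changed.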

Reducing SCI Through Product Strategy

Once you understand the SCI of your AI application, the next step is to reduce it. Here are strategic approaches to lowering SCI while maintaining product performance and scalability:

- Optimize AI Models: Streamlining algorithms and optimizing models can substantially reduce the energy required for both training and inference. For instance, techniques like model pruning, quantization, and prompt engineering can make AI models more efficient with little or no loss of accuracy. The key is to maintain the relevance of the context supplied to the model.

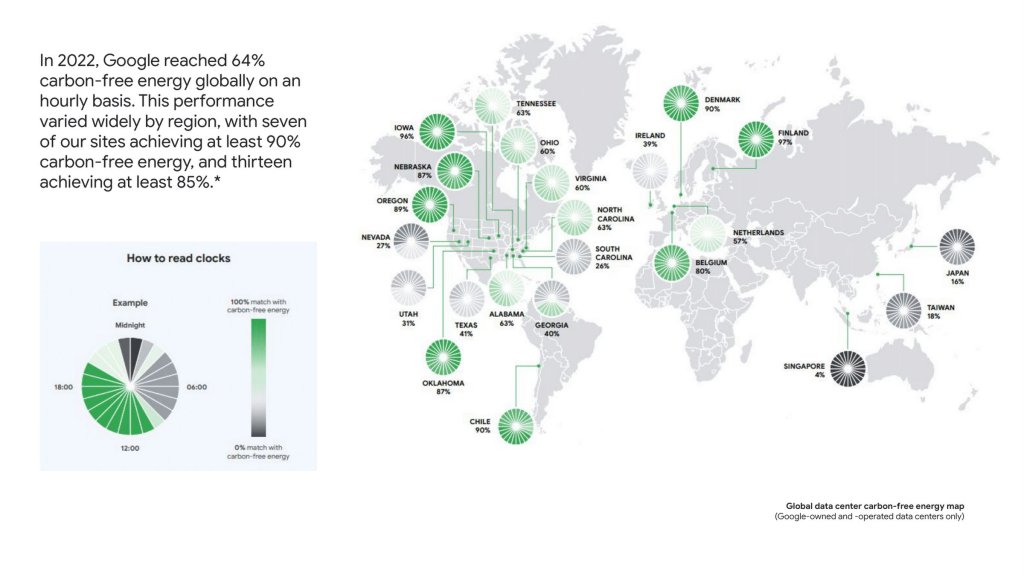

- Leverage Green Energy: Choosing data centers that run on renewable energy is critical. Many cloud providers now let you select cloud regions powered by low-carbon or carbon-free energy sources. Google and Microsoft, for example, have made substantial investments in 24/7 carbon-free energy, making them strong partners in reducing the I factor in SCI.

- Dynamic Scaling: Use infrastructure that scales with demand. This ensures you’re not consuming more energy than necessary during off-peak times. For AI applications, this could mean dynamically allocating computing resources based on real-time demand, minimizing idle infrastructure.

- Extend Hardware Lifecycles: Consider the hardware lifecycle in your strategy. By reusing equipment, keeping servers in service longer, and investing in technologies that reduce the embodied carbon of servers, you can bring down the M factor in the SCI formula. Additionally, AI applications should aim to run on the most energy-efficient hardware available, such as specialized AI chips that use less power than general-purpose CPUs or GPUs for the same workload, which lowers the E factor.

- Measure and Monitor: Regularly track your application’s SCI to understand its carbon impact and identify areas for improvement. Many cloud providers now offer tools that report the energy consumption and emissions associated with your usage, helping you make data-driven decisions for reducing SCI.
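
Two of the strategies above, choosing a low-carbon region and monitoring SCI over time, reduce to simple comparisons once the data is available. The following is a rough sketch under stated assumptions: the region names, intensity values, weekly readings, and budget are all hypothetical, and in practice they would come from your cloud provider's reporting tools or a grid-data source rather than hard-coded dictionaries.

```python
from __future__ import annotations


def lowest_carbon_region(intensity_g_per_kwh: dict[str, float]) -> str:
    """Return the region whose grid has the lowest carbon intensity (I)."""
    return min(intensity_g_per_kwh, key=intensity_g_per_kwh.__getitem__)


def over_budget(sci_by_week: dict[str, float], budget: float) -> list[str]:
    """Return the weeks whose SCI exceeded the budget, in input order."""
    return [week for week, value in sci_by_week.items() if value > budget]


# Hypothetical region names and grid intensities (gCO2e/kWh).
regions = {"region-a": 620.0, "region-b": 95.0, "region-c": 310.0}
print(lowest_carbon_region(regions))

# Hypothetical weekly SCI readings (gCO2e per request) and a team-set budget.
weekly = {"w1": 0.058, "w2": 0.061, "w3": 0.074}
print(over_budget(weekly, budget=0.065))
```

Treating SCI as a budget that a release must stay under, much like a latency or cost target, is one way to keep it visible in product decisions.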

The Future of Sustainable AI

As AI continues to grow in both capabilities and ubiquity, its environmental impact cannot be overlooked. SCI provides a framework for measuring this impact, helping organizations create more sustainable software products. With cloud providers like Amazon, Google, and Microsoft leading the charge in renewable energy and carbon reduction, integrating SCI into product strategy will not only help companies meet their sustainability goals but also align them with the future of green technology.

Incorporating SCI into product development ensures that AI innovation moves forward without sacrificing the planet’s future. By optimizing energy use, leveraging green energy, and managing hardware efficiency, businesses can build powerful, scalable AI systems that leave a lighter footprint on the environment. In the end, reducing SCI isn’t just about cutting emissions—it’s about creating a sustainable foundation for the future of technology.
